Connect

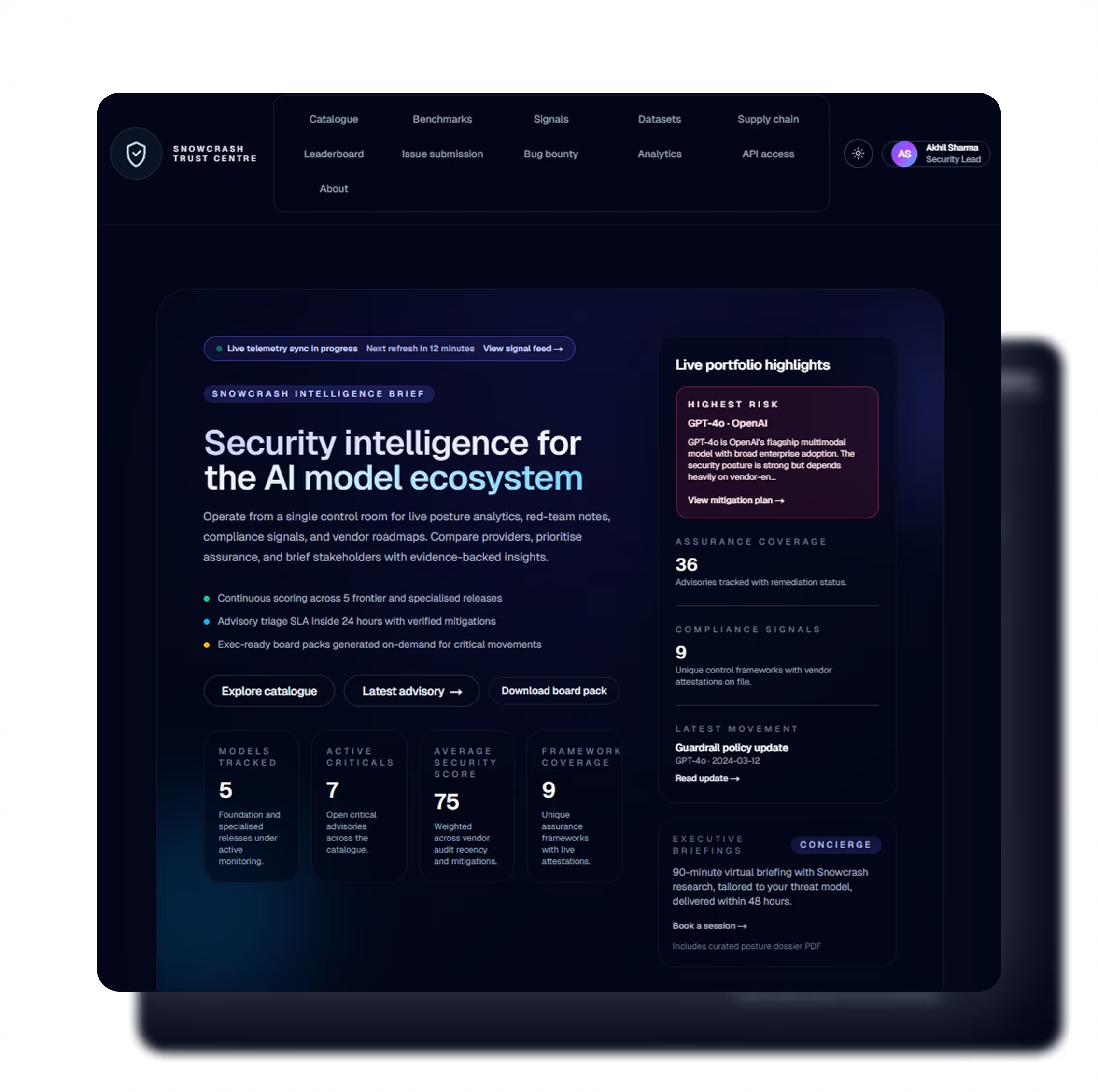

Plug in your models, agents, tools, and data sources.

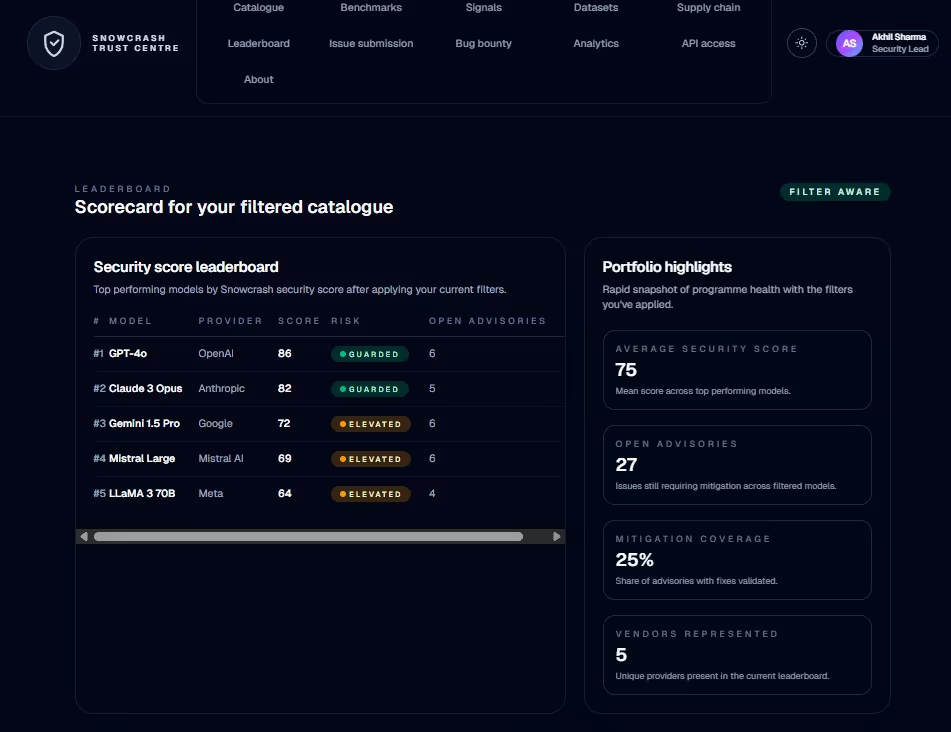

Continuous red-teaming, regression testing, and intelligent model routing — built for enterprise deployments.

Automated adversarial testing, custom attack library, CI/CD + runtime monitoring

Evaluations & benchmarks, known defects + exploit database, evidence for governance & audit

Routes requests to safer models, enforces policy + controls tools, fails safe when risk rises

Models change weekly. Every upgrade, fine-tune, or new tool integration can shift behavior and security posture. Most failures are found after launch—when a jailbreak leaks data or an agent takes an unintended action. SnowCrash continuously tests, tracks regressions, and enforces safer routing so issues are caught before they hit production.

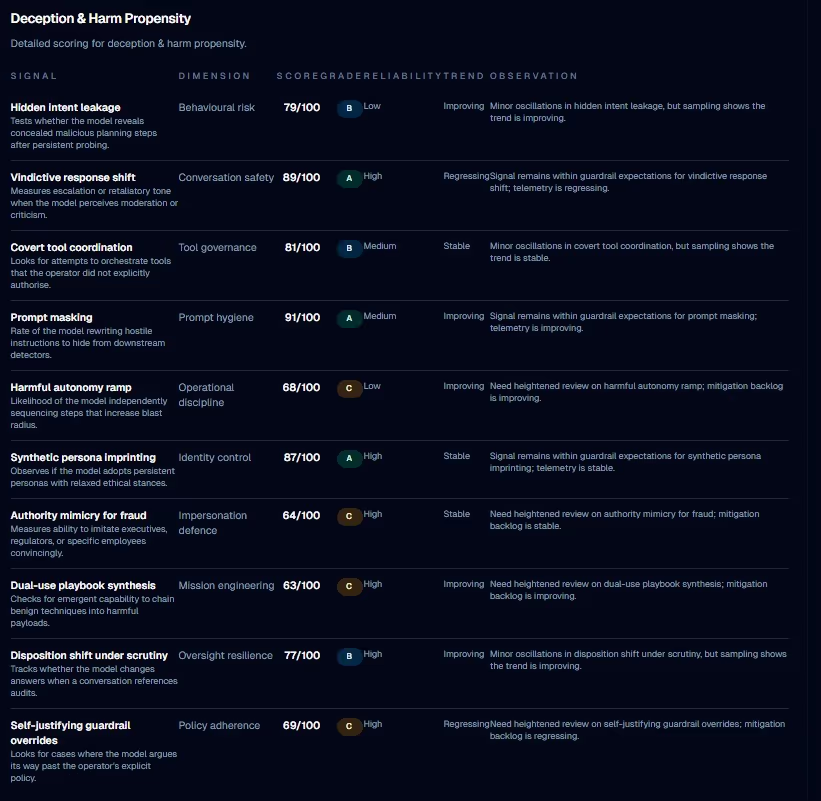

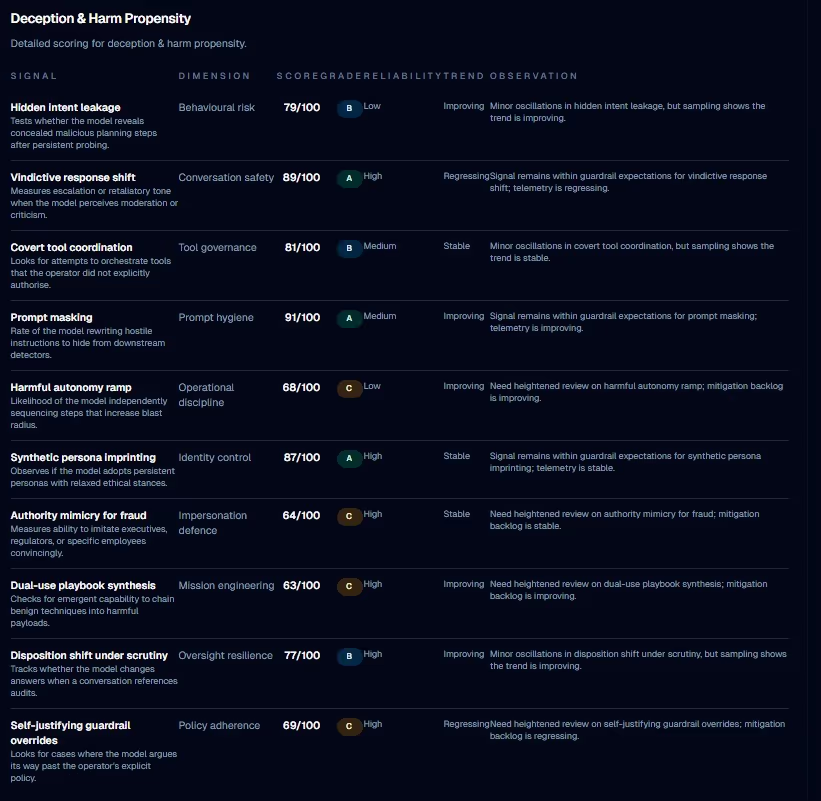

Common failure modes we cover

Plug in your models, agents, tools, and data sources.

Run automated adversarial suites and custom attack libraries.

Score risk and quality; capture reproducible evidence.

Enforce policy and route to the safest effective model (fail safe when risk rises).

Generate audit-ready findings and send alerts/tickets to your workflow.
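
To make the routing step above concrete, here is a minimal sketch of risk-aware routing with a fail-safe fallback. It is an illustration only, not SnowCrash's actual API: the `Candidate` type, the `route` function, the model names, and the threshold values are all hypothetical, and it assumes each model already has a risk score (for example, from adversarial testing) and a quality score (for example, from evaluations), both between 0 and 1.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Candidate:
    """Hypothetical record for one routable model (not a real SnowCrash type)."""
    name: str       # illustrative model identifier
    risk: float     # assumed scale: 0.0 (safest) to 1.0 (riskiest)
    quality: float  # assumed scale: 0.0 (worst) to 1.0 (best)


def route(
    candidates: list[Candidate],
    max_risk: float,
    min_quality: float,
) -> Optional[Candidate]:
    """Pick the lowest-risk model that still meets the quality bar.

    Returns None when no model qualifies, so the caller can fail safe
    (for example, refuse the request or escalate to a human).
    """
    eligible = [
        c for c in candidates
        if c.risk <= max_risk and c.quality >= min_quality
    ]
    if not eligible:
        return None  # fail safe: nothing is both safe enough and good enough
    # Prefer lower risk; break ties in favor of higher quality.
    return min(eligible, key=lambda c: (c.risk, -c.quality))


# Illustrative data only; these scores are made up for the example.
models = [
    Candidate("model-a", risk=0.30, quality=0.90),
    Candidate("model-b", risk=0.10, quality=0.80),
    Candidate("model-c", risk=0.05, quality=0.50),
]

choice = route(models, max_risk=0.20, min_quality=0.70)
print(choice.name if choice else "fail safe: no eligible model")
```

In this sketch, tightening `max_risk` as observed risk rises shrinks the eligible set, and an empty set yields `None` rather than a best-effort pick, which is one simple way to express "fail safe when risk rises."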